The importance of verifying historical drilling data for more accurate project evaluation and decision-making

GTK’s mineral economy services include Quality Assurance and Quality Control (QA/QC) consulting and project auditing. These services are designed to give clients a reliable basis for assessing potential project value and to support decision-making.

Prior to commencing any exploration activities, it is essential to assess all available previously collected exploration data. This includes searching the Geological Survey’s publicly available databases, reading reports and publications, and examining all geological maps that might be of regional interest. Datasets compiled by any previous project owner(s) also need to be carefully reviewed and customized. Sometimes such data comprise an extensive drill hole database used in a previous resource estimation. This is clearly of great value, but ensuring that the data and interpretations are sound and justified is not always simple. It is possible to model and reassess historical data with varying degrees of accuracy, but the real difficulties arise when the updated estimate has to be reported in accordance with international reporting standards.

As a general rule, historical data should never be trusted blindly; they always reflect the time at which they were collected. Whenever a previously reported mineral resource is updated on the basis of historical drilling data, it is essential that the data and earlier conclusions be carefully reviewed and verified. In many cases the historical data were not collected according to current industry best practices and, even after verification, might not be of sufficient quality for inclusion in an officially acceptable resource database. However, such data can still provide important geological information to assist and speed up further exploration and should therefore be utilized effectively, for example through pXRF, pXRD, hyperspectral mapping, petrography, and magnetic susceptibility and conductivity measurements. In an ideal situation, significant cost savings can also be achieved.

When we consider the technical advances in recent decades with respect to the accuracy and precision of drilling, drill hole location tools, chemical assaying and the range of elemental coverage, it is obvious that historical data are unlikely to represent the whole picture of mineralization, leaving plenty of opportunities for revisiting old targets.

From the 1950s to the 1970s, exploration drilling was typically conducted using a small Winkey drill or T-46 machinery producing 22 mm or 32 mm diameter drill core. The use of small drilling machinery resulted in greater unwanted drill hole deviation, which could not be surveyed accurately with the downhole tools available at the time. The small sample size also made it difficult to measure gold grades accurately where free gold was present, owing to the well-known nugget effect. This problem could be compounded by substantial core loss in prospective zones, resulting in under-reporting of grades. Currently the industry uses either NQ (47.6 mm) or HQ (63.5 mm) drilling equipment, which provides more stability and often better core recovery, and reduces the volume effect of highly nuggety minerals.
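To give a sense of how strongly core diameter affects sample size, the following minimal sketch computes the mass of one metre of full core for the diameters mentioned above. It treats the core as a solid cylinder and assumes a rock density of 2.7 g/cm³. That density and the function name `core_mass_per_metre` are illustrative assumptions, not values from any GTK project.

```python
import math


def core_mass_per_metre(diameter_mm: float, density_g_cm3: float = 2.7) -> float:
    """Mass in kg of one metre of full drill core, treated as a solid cylinder.

    The default density of 2.7 g/cm3 is an assumed, typical rock density.
    """
    radius_cm = diameter_mm / 10.0 / 2.0
    volume_cm3 = math.pi * radius_cm ** 2 * 100.0  # one metre is 100 cm
    return volume_cm3 * density_g_cm3 / 1000.0  # grams to kilograms


# Core diameters quoted in the text
for label, diameter_mm in [("22 mm", 22.0), ("32 mm", 32.0), ("NQ", 47.6), ("HQ", 63.5)]:
    print(f"{label:>6}: {core_mass_per_metre(diameter_mm):.2f} kg per metre")
```

Because mass scales with the square of the diameter, NQ core yields roughly 4.7 times as much material per metre as 22 mm core under these assumptions.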

Samples are collected at different volumes (e.g. half diamond core, riffle split sample, mill pulp and fire assay) from the so-called “lot”, which covers the whole range of materials from diamond core and RC sample material to stockpiles and surface soils. The objective is always to ensure that the sample is representative of the lot. This in turn provides the most accurate information for building a reliable mineral resource model. In gold exploration, grades in the range 0.5-1 g/t are already interesting for targeting and may also be economic to mine where present in large tonnages or as a by-product of other commodities. That said, only a very small proportion of gold nuggets relative to the whole volume is needed to generate higher grades. Gold is also a heavy element, which tends to settle differently from lighter elements. If the sample size is too small, there may not be enough of the mineralized component to represent the lot. Designing a sampling protocol that suits the specific geological characteristics of an exploration target is therefore essential. The volumes for which grades are estimated are commonly up to 1 million times the total sample volume on which the estimates are based, so the raw data must be of high quality.
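The effect of sample size on nuggety gold can be illustrated with a simple calculation. The sketch below assumes that all gold occurs as equal-sized spherical grains scattered randomly and independently through the rock, so that the number of grains in a sample follows a Poisson distribution. The grain size (500 µm), the grade (1 g/t) and the two sample masses are hypothetical values chosen for illustration, and real deposits will not follow this idealized model exactly.

```python
import math

GOLD_DENSITY_G_CM3 = 19.3  # density of pure gold


def expected_grains(grade_g_t: float, sample_mass_kg: float, grain_diameter_um: float) -> float:
    """Expected number of gold grains in a sample.

    Assumes every grain is a sphere of the same diameter (a simplification).
    """
    gold_mass_g = grade_g_t * sample_mass_kg / 1000.0  # g/t times tonnes
    diameter_cm = grain_diameter_um / 10000.0
    grain_mass_g = GOLD_DENSITY_G_CM3 * math.pi / 6.0 * diameter_cm ** 3
    return gold_mass_g / grain_mass_g


def prob_no_grains(grade_g_t: float, sample_mass_kg: float, grain_diameter_um: float) -> float:
    """Poisson probability that a sample contains no gold grain at all."""
    return math.exp(-expected_grains(grade_g_t, sample_mass_kg, grain_diameter_um))


# Hypothetical small and large samples at the same true grade
for mass_kg in (0.5, 2.4):
    n = expected_grains(1.0, mass_kg, 500.0)
    p0 = prob_no_grains(1.0, mass_kg, 500.0)
    print(f"{mass_kg} kg sample: {n:.2f} grains expected, P(no gold) = {p0:.2f}")
```

Under these assumptions the 0.5 kg sample is expected to hold only about 0.4 grains, so most such samples would contain no gold at all even though the true grade is 1 g/t, while the few that catch a grain would report a much higher grade.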

Laboratory methods have also become more accurate, more precise and cheaper, with shorter turnaround times. In the 1960s, fire assay with an atomic absorption spectroscopy finish (FAAS) was developed, and since then it has been a popular method for analyzing precious metals (e.g. Au, Ag, platinum-group metals) in commercial laboratories. However, in historical programs precious metals were not necessarily assayed systematically, and a QC system with insertion of standards, blanks and duplicates to monitor laboratory performance was lacking. It is therefore essential first to carry out a re-assaying program to check the old assay data. After that, twinned hole verification and other opportunities to increase the value of the data should be assessed (e.g. re-measuring the collars with Differential GPS and conducting new downhole survey measurements). These technologies, together with traditional metre marking of core samples, enable accurate positioning of the mineralized intercepts in the 3D environment.
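One common way to compare original assays with re-assays is the half absolute relative difference (HARD) of each pair. The following minimal sketch computes HARD and the share of pairs that fall within a chosen tolerance. The assay values and the 10 % tolerance are made-up illustrations, not data or acceptance criteria from any GTK project; suitable limits depend on the sample type and the deposit.

```python
def hard_percent(original: float, reassay: float) -> float:
    """Half absolute relative difference of an assay pair, in percent."""
    total = original + reassay
    if total == 0:
        return 0.0  # both values are zero, so there is no difference
    return abs(original - reassay) / total * 100.0


def fraction_within(pairs: list[tuple[float, float]], threshold_percent: float) -> float:
    """Share of pairs whose HARD is at or below the threshold."""
    if not pairs:
        raise ValueError("at least one assay pair is required")
    passed = sum(1 for orig, new in pairs if hard_percent(orig, new) <= threshold_percent)
    return passed / len(pairs)


# Hypothetical (original, re-assay) gold grades in g/t
pairs = [(1.20, 1.10), (0.55, 0.60), (3.40, 2.10), (0.80, 0.82)]

for orig, new in pairs:
    print(f"{orig:.2f} vs {new:.2f} g/t: HARD = {hard_percent(orig, new):.1f} %")
print(f"Share of pairs within 10 %: {fraction_within(pairs, 10.0):.2f}")
```

A check of this kind measures precision only. Detecting bias or contamination additionally requires the standards and blanks mentioned above.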

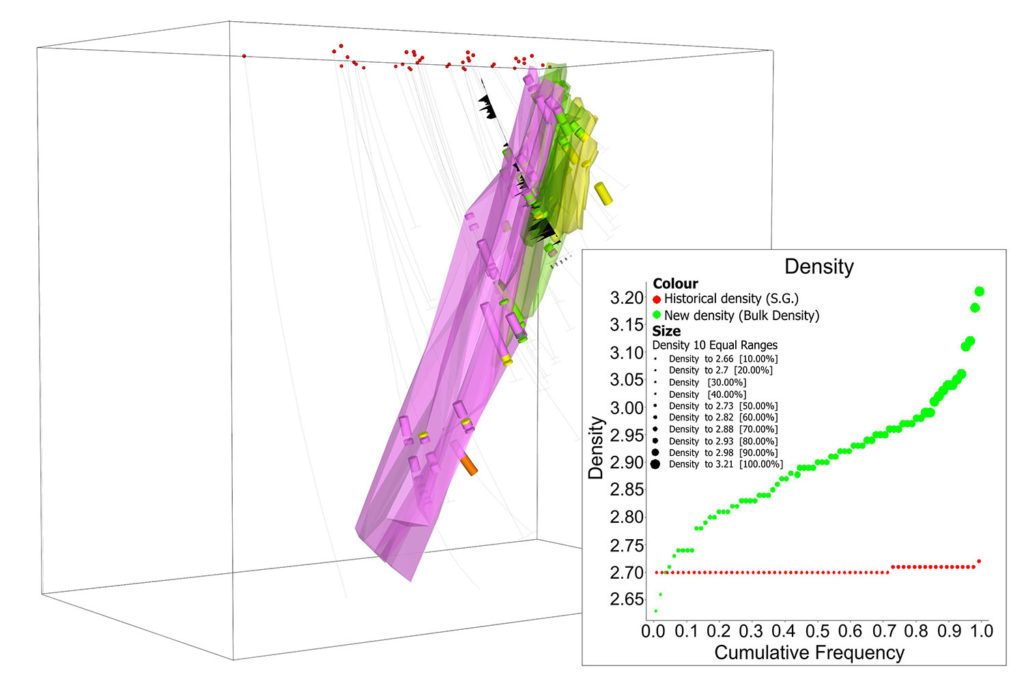

Mineral resource estimates rely on three main inputs: 1) grade, 2) volume and 3) bulk density, the last of which is often somewhat neglected during mineral exploration. Checking old density data should therefore also be a top priority when verifying historical data (Fig. 1). Poor-quality bulk density measurements result in unreliable tonnage estimates. When bulk density is measured, separate QA/QC procedures should be in place to monitor accuracy and precision.
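The link between bulk density and tonnage is a simple product, which is why a density error carries straight through to the resource figure. The sketch below shows this with hypothetical numbers: the volume, grade and the two density values are illustrative assumptions only.

```python
def tonnage(volume_m3: float, bulk_density_t_m3: float) -> float:
    """Tonnage of a modelled volume: volume times bulk density."""
    return volume_m3 * bulk_density_t_m3


def contained_gold_kg(tonnes: float, grade_g_t: float) -> float:
    """Contained gold in kg: tonnes times grade in g/t, converted from grams."""
    return tonnes * grade_g_t / 1000.0


# Hypothetical mineralized volume and grade
volume_m3 = 1_000_000.0
grade_g_t = 1.0

# Same volume and grade, two different bulk density assumptions
for density in (2.8, 3.0):
    t = tonnage(volume_m3, density)
    print(f"density {density} t/m3: {t:,.0f} t, {contained_gold_kg(t, grade_g_t):,.0f} kg Au")
```

In this example a density difference of 0.2 t/m³ changes both the tonnage and the contained metal by about 7 %, with no change in grade or volume.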

GTK has carried out several reviews and validations of historical drill hole data sets. Recently, GTK experts provided a client with a data review and validation together with updated 3D modelling and a block model estimate. Data verification included the following steps:

1. Re-assaying a representative set of drill core samples in an accredited commercial laboratory, with external QC samples inserted,

2. Measuring bulk density in the different estimation domains using the water replacement method,

3. Checking lithological units against the original logging sheets, together with susceptibility measurements, and

4. Mapping volcanic facies and alteration to understand the depositional environment and to help future exploration targeting.

In addition, the work included 3D wireframe modelling, geostatistical analysis and block modelling. Overall, the work provided the client with a high-quality data package that supported the evaluation of project value and decision-making.
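The bulk density measurements in step 2 of the verification list can be sketched as follows, on the assumption that the method involves weighing each core piece in air and again while submerged in water (Archimedes' principle). The exact procedure used in the project is not described here, so the function name, the water density and the example weights are hypothetical.

```python
WATER_DENSITY_G_CM3 = 1.0  # assumed density of fresh water at room temperature


def bulk_density_immersion(mass_air_g: float, mass_water_g: float,
                           water_density_g_cm3: float = WATER_DENSITY_G_CM3) -> float:
    """Density in g/cm3 from the weight of a core piece in air and in water.

    The weight lost in water equals the mass of the displaced water,
    which gives the volume of the piece.
    """
    displaced_g = mass_air_g - mass_water_g
    if displaced_g <= 0:
        raise ValueError("mass in water must be smaller than mass in air")
    return mass_air_g / displaced_g * water_density_g_cm3


# Hypothetical core piece: 500 g in air, 320 g submerged
print(f"{bulk_density_immersion(500.0, 320.0):.2f} g/cm3")
```

This simple form suits competent, non-porous core. Porous or friable samples generally need to be sealed or handled with a different procedure, otherwise water uptake distorts the result.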

Text: Janne Hokka

Janne Hokka (MSc, EuroGeol) is a resource geologist in the Mineral Economy Solutions Unit at GTK. He has worked for the Geological Survey of Finland since 2012. Janne provides client services in 3D modelling, QA/QC, mineral resource estimation and resource audits. His current research interests are in VMS mineral systems in Finland, which are part of his ongoing doctoral studies. During his career Janne has worked as an exploration geologist in Finland, Africa, the Middle East and Australia on various exploration and mining-related projects.